Originality.ai is an AI content detection and plagiarism-checking tool used mainly by publishers, agencies, and content teams reviewing large volumes of text. On the surface, it promises something straightforward: an estimate of whether a piece of writing is human-written, AI-generated, or reused from existing sources.

But the reason tools like Originality.ai exist has very little to do with the tool itself.

AI-generated content is now everywhere. Articles, blog posts, marketing copy, reports, and even academic drafts are routinely produced with some level of AI assistance. As this became normal, a deeper problem surfaced: the boundary between human and machine writing is no longer obvious, even to experienced readers.

Originality.ai sits directly inside that uncertainty. Looking at how it behaves tells us less about whether it is “good” or “bad” and more about how AI detection works at all, and where it fundamentally struggles.

This article examines how Originality.ai works in practice, how accurate it is, and where it struggles. It covers real testing, false positives, comparisons with other AI detection tools, pricing, privacy, and what to do if human writing is flagged.

What is Originality AI?

Originality.ai is an AI content detection and plagiarism-checking tool designed for agencies, publishers, and SEO teams that review large volumes of written content. Its role is to estimate, based on observable patterns, whether text resembles AI-generated output, reused material, or human writing.

In practice, Originality.ai is built for screening at scale, not for resolving individual authorship disputes. It combines AI pattern detection with plagiarism checking so teams can assess content quickly and decide what requires closer human review. This operational focus explains why it is widely adopted in publishing and agency workflows, where efficiency matters more than absolute certainty.

How Originality AI Works

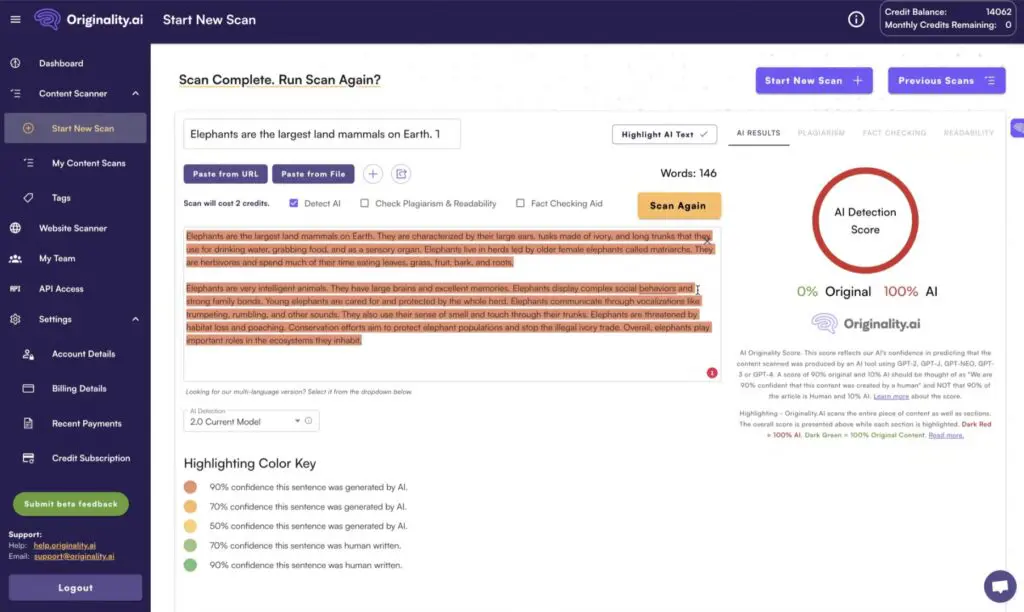

Originality.ai analyzes text by comparing it against patterns learned from known AI-generated and human-written samples. It looks for statistical signals such as predictability, structural consistency, and sentence-level patterns, then assigns a score indicating how confident it is that the text matches AI output. That score is a confidence level, not the share of the text that was machine-written.

The system does not evaluate intent, context, or writing history. It cannot determine whether a human drafted the text, edited AI output, or collaborated with a language model. Like other AI content detection tools, it evaluates only the final surface of the writing. It measures how the content behaves, not how it was produced.
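To make "structural consistency" more concrete, here is a minimal Python sketch of one such signal: how much sentence length varies across a passage, sometimes called burstiness. It is a hypothetical illustration, not Originality.ai's method, which is not public. The function name `sentence_length_stats`, the naive regex sentence splitter, and the use of the coefficient of variation are all assumptions made for this example.

```python
import re
import statistics


def sentence_length_stats(text: str) -> dict:
    """Hypothetical heuristic, not Originality.ai's method: measure how
    uniform sentence lengths are across a passage."""
    # Naive split: a sentence ends at '.', '!' or '?' followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not sentences:
        raise ValueError("text contains no sentences")
    lengths = [len(s.split()) for s in sentences]  # words per sentence
    mean = statistics.mean(lengths)
    stdev = statistics.pstdev(lengths)  # population std dev of sentence lengths
    return {
        "sentences": len(lengths),
        "mean_words": mean,
        # Coefficient of variation: spread relative to the mean.
        # Lower values mean more uniform, more "predictable" pacing.
        "cv": stdev / mean,
    }


uniform = "The door opened slowly. The man stepped inside quietly. The room was very dark."
varied = "Stop. He hesitated at the threshold, listening for anything at all, then went in. Dark."

print(round(sentence_length_stats(uniform)["cv"], 2))  # 0.1
print(round(sentence_length_stats(varied)["cv"], 2))   # 1.13
```

A real detector combines many learned features rather than one hand-written ratio, but the sketch shows why steady, evenly paced prose can look "predictable" regardless of who wrote it.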

Which LLMs Originality AI Can Detect

Originality.ai is designed to detect content that resembles output from modern large language models, based on shared linguistic and structural patterns rather than model identification.

It is commonly tested against, and capable of flagging, content similar to the output of well-known LLM families, including:

- OpenAI GPT models (ChatGPT, GPT-5 and related versions)

- Anthropic Claude models

- Google Gemini models

- Meta Llama models

- Other popular generative text models with similar architectures

Originality.ai does not identify which specific model produced a text. It estimates likelihood based on how closely the writing matches patterns typically found in contemporary LLM outputs.

Is Originality AI Accurate? What Testing Actually Shows

Originality.ai is accurate at detecting unedited AI-generated content, but its reliability drops for edited AI text, hybrid drafts, and stylistically consistent human prose. This limitation is not unique to Originality.ai. It reflects how AI content detection works in general.

Accuracy is often discussed as a percentage, but that framing misses what detection tools actually do. They do not verify authorship. They estimate how closely a piece of writing matches known AI-generated patterns.

In straightforward cases, Originality.ai behaves predictably. Content produced directly by large language models with little or no human editing is usually flagged with high confidence. That outcome is expected and largely uncontroversial.

The more revealing behavior appears when writing moves away from those clean cases. Edited AI text, hybrid drafts, and purely human writing with consistent narrative structure all complicate the signal. In those situations, accuracy stops being a fixed number and becomes something that must be interpreted in context rather than accepted at face value.
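One reason a score must be read in context is base rates. The sketch below applies Bayes' rule to estimate how likely a flagged text is to be AI-generated. The sensitivity, false-positive rate, and share of AI text are hypothetical inputs chosen for illustration, not measured Originality.ai figures, and `prob_ai_given_flag` is a name invented here.

```python
def prob_ai_given_flag(sensitivity: float, false_positive_rate: float,
                       ai_share: float) -> float:
    """Bayes' rule: probability that a flagged text is actually AI-generated.

    sensitivity:         share of AI-generated texts the detector flags
    false_positive_rate: share of human-written texts it wrongly flags
    ai_share:            share of AI-generated texts in the screened pool
    """
    true_flags = sensitivity * ai_share
    false_flags = false_positive_rate * (1 - ai_share)
    return true_flags / (true_flags + false_flags)


# Hypothetical detector: flags 95% of AI texts and 5% of human texts.
print(round(prob_ai_given_flag(0.95, 0.05, 0.10), 2))  # 0.68 when 10% of the pool is AI
print(round(prob_ai_given_flag(0.95, 0.05, 0.50), 2))  # 0.95 when half the pool is AI
```

With the same hypothetical detector, roughly one flag in three lands on a human text when only 10 percent of the screened pool is AI-generated, but far fewer do when half of it is. The detector has not changed; the context has.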

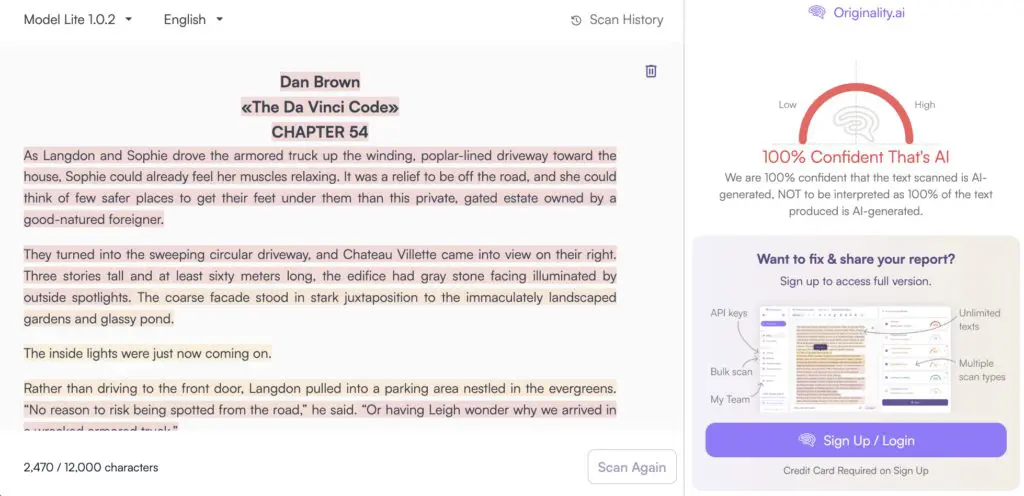

Testing Originality AI on Known Human Writing

To see how far pattern recognition can stretch, we tested Originality.ai on a passage from Chapter 54 of The Da Vinci Code. The novel is human-written and was published in 2003, long before modern generative AI existed.

Originality.ai returned a 100 percent AI score for the passage.

This is not evidence that the passage was machine-written. It is evidence that detection systems respond to predictability. The prose in that chapter is steady in rhythm, consistent in sentence length, and narratively efficient, and those qualities overlap with how language models generate text.

In a technical sense, Originality.ai is not malfunctioning. It is doing exactly what it was built to do: measuring structure, not origin.

This kind of false positive is not unique to this tool. Similar results appear when testing other detectors, including GPTZero. The example matters because it shows a deeper limitation: detectors evaluate how writing behaves, not who produced it.

Originality AI vs Other AI Detection Tools

Originality.ai is often compared to other AI detection tools, but those comparisons only make sense once you understand what each tool is actually built to do.

| Tool | Primary Focus | AI Detection Strength | Plagiarism Detection | Best Use Case |
|---|---|---|---|---|
| Originality.ai | AI detection + plagiarism | Strong on unedited AI content | Yes (web-based) | Agencies, publishers, SEO teams |
| GPTZero | AI detection only | Strong on pure AI text | No | Education, individual checks |
| Turnitin | Academic integrity | Conservative AI detection | Yes (academic database) | Universities, schools |
| Copyleaks | Enterprise AI + plagiarism | Strong across multiple models | Yes (multi-language) | Enterprise content operations |

Key Takeaways from the Comparison

- Originality.ai combines AI detection with plagiarism checking, which most standalone detectors like GPTZero do not.

- GPTZero’s strength is focused AI detection with detailed feedback, but it lacks integrated plagiarism tools.

- Turnitin excels at academic plagiarism, and its AI detection is tailored for that environment.

- Grammarly, which is not in the table, is primarily a grammar and writing assistant; its AI detection is a supplementary feature.

- QuillBot, also outside the table, offers an AI detector that covers output from major models, but it remains secondary to the tool's paraphrasing and rewriting focus.

What to Do If Originality AI Flags Your Human Writing

If Originality.ai flags your writing as AI-generated and you know you wrote it yourself, stay calm. This happens more often than people realize.

AI detectors do not know who wrote a text. They only react to patterns. Clear, structured, or formal writing can sometimes look “too clean” and trigger a high score.

Start by looking at which parts were flagged. Often they are the most neutral or tightly written sections, not the parts with original thinking.

If this is for work or school, explain how you wrote it. Talk through your reasoning, research, or structure. Being able to explain your choices matters more than any percentage score.

If possible, share drafts, notes, or outlines. They show process, which detectors cannot see.

Most importantly, treat the result as a signal, not a verdict. AI detection should prompt review and discussion, not automatic conclusions.

Can You Bypass Originality AI Detection?

In most cases, no. Originality.ai reliably flags unedited or lightly edited AI-generated content. With heavy human editing, hybrid drafting, or substantial rewriting, however, detection confidence can drop. As text becomes less statistically similar to raw AI output, the signal weakens and results become harder to interpret. Common techniques people use to try to bypass Originality.ai detection include:

- First-person writing lowers detection confidence.

Using “I”, expressing personal opinions, internal reasoning, or subjective reflection introduces irregularity that AI models struggle to replicate consistently. Pure AI output tends to avoid strong personal framing unless prompted heavily.

- Dialogue reduces detection reliability.

Quoted speech, conversational exchanges, interruptions, and informal phrasing break the smooth narrative flow detectors expect. AI-generated text usually maintains a steady rhythm and tone, even in fictional dialogue.

- Sentence length variation matters more than word choice.

Mixing very short sentences with longer, more complex ones disrupts predictability. Human writers do this naturally. AI tends to maintain consistent sentence length and cadence unless explicitly forced otherwise.

- Inconsistent structure weakens pattern recognition.

Switching between reflective paragraphs, factual statements, rhetorical questions, and narrative sections reduces statistical uniformity. Detectors perform best when structure remains stable throughout the text.

- Subjective framing beats neutral exposition.

AI excels at neutral, informational writing. Adding uncertainty, hesitation, opinion, and personal judgment introduces signals that are harder to classify as AI-generated.

- Minor punctuation errors reduce confidence further.

Small punctuation irregularities such as missing commas, extra commas, unconventional spacing, sentence fragments, or informal punctuation choices disrupt the polished consistency typical of AI output. Language models tend to produce grammatically clean, well-punctuated text by default. Even subtle imperfections introduce noise that weakens detection confidence.

In short, the more irregular, personal, structurally uneven, and imperfect the writing becomes, the harder it is for detection systems to assign high AI confidence. This does not require better writing, only less predictable writing.

Originality AI Pricing and Plans

Originality.ai is a web-based platform that you access through a browser. It does not have a standalone mobile or desktop app, but it does offer a Chrome extension that lets you run scans directly inside tools like Google Docs or while browsing content.

New users can test Originality.ai in limited ways before paying. The website allows basic AI detection with usage limits, and the Chrome extension typically provides a small number of free credits so users can try the detection features before committing to a plan. These free options are meant for testing, not ongoing use.

For full access, Originality.ai uses a credit-based pricing model:

- Pay-as-you-go: One-time credit purchases, such as a bundle of around 3,000 credits for a fixed price, useful for occasional or one-off scans.

- Monthly subscription: Plans starting at approximately $14.95 per month, which include a set number of credits and are better suited for regular use.

- Enterprise plans: Larger credit allowances, API access, team features, and priority support, designed for agencies and organizations scanning content at scale.

Credits are consumed based on how much content you scan and which features you use, so costs scale with usage rather than being fixed.
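As a rough planning aid, the sketch below estimates credit usage for a batch of documents. It assumes, for illustration only, that one credit covers 100 words per scan and that partial blocks round up; the real rate, the rounding rules, and whether AI detection and plagiarism checks are billed separately should be confirmed on Originality.ai's current pricing page. `estimate_credits` and its parameters are hypothetical.

```python
import math


def estimate_credits(word_counts: list[int], words_per_credit: int = 100,
                     scans_per_doc: int = 1) -> int:
    """Hypothetical credit estimate for a batch of documents.

    ASSUMPTIONS (verify against the current pricing page):
    - one credit covers `words_per_credit` words per scan;
    - a partial block of words still costs a full credit;
    - running an extra check (for example plagiarism as well as AI
      detection) is modelled as an extra scan of the same document.
    """
    credits_per_scan = sum(math.ceil(words / words_per_credit) for words in word_counts)
    return credits_per_scan * scans_per_doc


# 20 articles of 1,500 words, each checked for AI and plagiarism.
print(estimate_credits([1500] * 20, scans_per_doc=2))  # 600
# Two short pieces, AI check only: 11 + 5 credits.
print(estimate_credits([1050, 480]))  # 16
```

Because the estimate grows linearly with word count and number of checks, a team can compare the result against a plan's monthly allowance before choosing between pay-as-you-go and a subscription.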

Privacy and Data Handling in Originality AI

Originality.ai needs to process the text you submit in order to run AI detection and plagiarism checks. If you use the web dashboard, your submitted content and scan results are stored so you can review them later or share them with your team.

If you use the API, Originality.ai states that content is processed for detection and not kept long term, which makes the API a better option for sensitive or confidential text.

The company says it follows standard data protection practices, including compliance with GDPR. Like other AI detection tools, it analyzes writing patterns but does not try to link submitted content to personal identity.

If you are working with confidential or proprietary material, it’s best to review the company’s current privacy policy before uploading content.

How to Think About Originality AI

Originality.ai is not a judge of authorship. It is a pattern recognition system operating in a space where the line between human and machine writing is increasingly blurred.

What it reveals most clearly is not whether a piece of content is “AI” or “human”, but how fragile that distinction has become. Predictable writing looks artificial. Irregular writing looks human. As language models improve, that gap will continue to narrow.

Used carefully, Originality.ai helps teams manage risk and focus review where it matters. Used carelessly, it can turn probability scores into misplaced certainty. The tool highlights patterns. The responsibility for interpretation remains human.

Frequently Asked Questions

Is Originality.ai trusted?

Yes, Originality.ai is widely used by publishers, agencies, and SEO teams. Trust depends on how results are interpreted. It is best used as a screening tool, not as definitive proof of AI use.

Is Originality.ai as good as Turnitin?

They serve different purposes. Turnitin is built for academic plagiarism detection with institutional databases. Originality.ai focuses more on AI content detection and web-based plagiarism for publishing workflows.

Does Originality.ai humanize text?

No. Originality.ai does not rewrite or humanize content. It only analyzes text and flags patterns associated with AI generation or plagiarism.

Is Originality.ai better than GPTZero?

Neither tool is universally better. GPTZero focuses on AI detection with detailed feedback and is often used in education. Originality.ai combines AI detection with plagiarism checking and is better suited for teams reviewing content at scale.

Is Originality AI accurate?

Originality.ai is accurate at detecting unedited AI-generated text. Accuracy decreases with edited, hybrid, or highly structured human writing. Results should be considered probabilities, not final judgments.